Kubernetes Tutorial — Advanced Overview of K8s

Kubernetes, also known as K8s, is an open-source orchestrator for deploying and managing containerized applications. It provides container orchestration, container-centric infrastructure orchestration, self-healing, service discovery, load balancing, and scaling of containers up and down. Kubernetes does not run containers itself. It delegates that work to a container runtime installed on each node. Kubernetes was initially developed by Google for managing containerized applications in clustered environments and was later donated to the CNCF (Cloud Native Computing Foundation), which is part of the Linux Foundation.

Kubernetes is written in the Go programming language. It is a platform designed to manage the complete life cycle of containerized applications and services in a way that provides predictability, scalability, and high availability.

Container orchestration automates the deployment, management, scaling, and networking of containers across a cluster, with a focus on managing the life cycle of containers. Enterprises that deploy and manage hundreds or thousands of Linux containers and hosts benefit the most from it.

Container orchestration is used to automate the following tasks at scale:

- Configuring and scheduling containers

- Provisioning and deploying containers

- Keeping containers redundant and available

- Monitoring the health of containers and hosts

- Scaling containers up or down to spread application load evenly across the host infrastructure

- Moving containers from one host to another if a host runs short of resources or dies

- Allocating resources between containers

- Exposing services that run in containers to the outside world

- Service discovery and load balancing between containers
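As a rough illustration of two of these tasks (spreading load evenly and moving containers off a host that dies), here is a toy placement routine in Python. The function names `place` and `evacuate` and the "fewest containers first" rule are assumptions made for this sketch; real orchestrators also weigh CPU, memory, and many other constraints.

```python
def place(containers, hosts, placement=None):
    """Assign each unplaced container to the host currently running the fewest containers.

    Hypothetical helper for illustration only; not part of any Kubernetes API.
    """
    placement = dict(placement or {})
    # Count how many containers each live host already runs.
    load = {host: 0 for host in hosts}
    for host in placement.values():
        if host in load:
            load[host] += 1
    for container in containers:
        if container in placement:
            continue  # already placed, leave it where it is
        # min() returns the first least-loaded host, so ties go to the earlier host in the list.
        target = min(hosts, key=lambda h: load[h])
        placement[container] = target
        load[target] += 1
    return placement


def evacuate(placement, dead_host, hosts):
    """Move every container off `dead_host` and re-place it on the surviving hosts."""
    survivors = [h for h in hosts if h != dead_host]
    kept = {c: h for c, h in placement.items() if h != dead_host}
    orphans = [c for c, h in placement.items() if h == dead_host]
    return place(orphans, survivors, kept)
```

Placing six containers on three hosts gives each host two; if one host then dies, its two containers are split across the remaining hosts so each ends up with three.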

Kubernetes and Docker Swarm are both container orchestration tools. Both are used to deploy containers inside a cluster, but there are a few differences between them.

Online Emulator: https://labs.play-with-k8s.com/

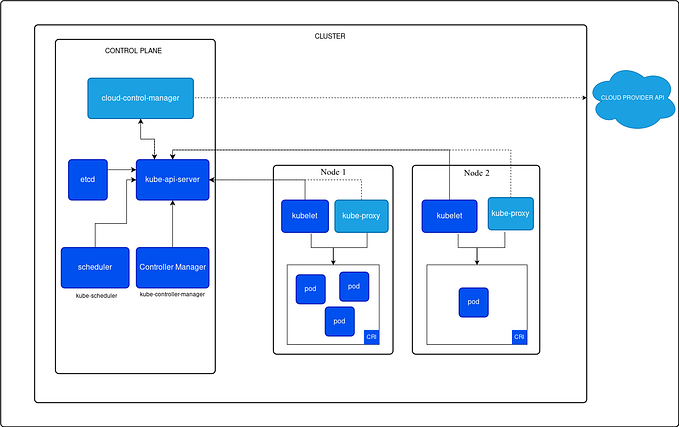

A Kubernetes cluster is a collection of physical or virtual machines and other infrastructure resources that are required to run your containerized applications. Each machine in a Kubernetes cluster is called a Node.

There are two types of nodes in each Kubernetes cluster:

- Master Node: hosts the Kubernetes control plane components and manages the Kubernetes cluster

- Worker Node: runs your containerized applications

The Kubernetes master is responsible for managing the entire cluster. You can access the master node via the CLI, GUI, or API. The master watches over the nodes in the cluster and is responsible for the actual orchestration of containers on the worker nodes. For fault tolerance, a cluster can have more than one master node. The master is the access point from which administrators and other users interact with the cluster to manage the scheduling and deployment of containers.

The master node, or control plane, has four main components: kube-apiserver, etcd, kube-controller-manager, and kube-scheduler.

Masters communicate with the rest of the cluster through kube-apiserver, the main access point to the control plane. It validates and processes the REST requests sent by users and other components. kube-apiserver is also the only component that reads from and writes to etcd directly; the controllers and kubelets then work to make the containers deployed in the cluster match the configuration stored there.
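To make "validates and processes REST requests" concrete, here is a heavily simplified Python stand-in for what happens when a client sends something like `POST /api/v1/namespaces/default/pods`. The class name `ToyAPIServer`, the required-field check, and the naive pluralization of the kind are assumptions for this sketch. The real API server also performs authentication, authorization, and admission control, and persists objects in etcd rather than in a Python dict.

```python
import json


class ToyAPIServer:
    """Toy stand-in for kube-apiserver: validate a create request, then persist it.

    Hypothetical and simplified; the dict below plays the role of etcd.
    """

    REQUIRED_FIELDS = ("apiVersion", "kind", "metadata")

    def __init__(self):
        self.store = {}  # key -> stored object

    def create(self, namespace, body):
        """Return (HTTP status code, response object) for a create request."""
        try:
            obj = json.loads(body)
        except json.JSONDecodeError:
            return 400, {"error": "request body is not valid JSON"}
        if not isinstance(obj, dict):
            return 400, {"error": "request body must be a JSON object"}
        missing = [f for f in self.REQUIRED_FIELDS if f not in obj]
        if missing:
            return 422, {"error": "missing fields: " + ", ".join(missing)}
        name = obj["metadata"].get("name") if isinstance(obj["metadata"], dict) else None
        if not name:
            return 422, {"error": "metadata.name is required"}
        # Naive pluralization ("Pod" -> "pods") is an assumption of this sketch.
        key = f"/registry/{obj['kind'].lower()}s/{namespace}/{name}"
        if key in self.store:
            return 409, {"error": "object already exists"}
        self.store[key] = obj
        return 201, obj
```

Valid requests are stored and return 201, duplicates are rejected with 409, and malformed objects never reach the store.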

etcd is a distributed, reliable key-value store that Kubernetes uses to store all the data needed to manage the cluster. When a cluster has multiple masters, etcd replicates this data across its members (usually running on the master nodes) so that every copy stays consistent. etcd is also used to implement locks within the cluster so that multiple masters do not make conflicting changes.
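etcd keeps a revision number for every write, and its transactions can compare a key's revision before writing. That is the basic idea behind both locks and conflict-free updates. The in-memory Python class below only imitates that idea in a single process; `ToyKV`, `put_if`, and `try_acquire_lock` are hypothetical names and not etcd's client API, and real etcd adds replication, leases, and watches.

```python
class ToyKV:
    """Single-process imitation of etcd's versioned key-value model (not etcd's API)."""

    def __init__(self):
        self.revision = 0  # global revision, bumped on every successful write
        self.data = {}     # key -> (value, revision of last modification)

    def get(self, key):
        """Return (value, mod_revision); a missing key reports (None, 0)."""
        return self.data.get(key, (None, 0))

    def put_if(self, key, value, expected_revision):
        """Compare-and-swap: write only if the key's mod_revision equals expected_revision.

        expected_revision=0 means "only write if the key does not exist yet".
        """
        _, current = self.get(key)
        if current != expected_revision:
            return False  # someone else changed the key first, so reject this write
        self.revision += 1
        self.data[key] = (value, self.revision)
        return True


def try_acquire_lock(kv, lock_key, holder):
    """Creating the lock key only succeeds if nobody holds it yet."""
    return kv.put_if(lock_key, holder, expected_revision=0)
```

Two masters racing for the same lock key cannot both win, and an update based on a stale revision is rejected instead of silently overwriting newer data.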

The controllers are the brain behind orchestration. They are responsible for noticing and responding when nodes, containers, or endpoints go down, and they decide when to bring up new containers in such cases. kube-controller-manager runs the control loops that manage the state of the cluster, continuously checking whether the required deployments, replicas, and nodes are running.
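The core of a control loop is a reconcile step that compares the desired state with the observed state and works out what to change. The Python sketch below shows one such pass for a replica count; a real controller repeats it every time it is notified of a change. The class `ReplicaController` and the `replica-N` naming scheme are hypothetical.

```python
class ReplicaController:
    """Toy controller that keeps a fixed number of replicas running (illustrative only)."""

    def __init__(self, desired):
        self.desired = desired
        self._next_id = 0  # used to give newly created replicas unique names

    def reconcile(self, running):
        """One reconcile pass: return (to_create, to_delete) so `running` matches `desired`."""
        to_create, to_delete = [], []
        missing = self.desired - len(running)
        for _ in range(max(missing, 0)):
            self._next_id += 1
            to_create.append(f"replica-{self._next_id}")
        if missing < 0:
            # Too many replicas are running: remove the surplus ones.
            to_delete = list(running[self.desired:])
        return to_create, to_delete
```

If one of three replicas crashes, the next pass returns a single replica to create; if there are too many, it returns the surplus to delete; and when actual matches desired, it does nothing.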

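The scheduler (kube-scheduler) is responsible for distributing work, that is, containers, across multiple nodes. It watches for newly created containers (grouped into pods) that have not yet been assigned to a node and assigns each one to a suitable node.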
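kube-scheduler chooses a node in two phases: filtering out nodes that cannot run the workload, then scoring the remaining ones. The Python sketch below imitates that shape. The `Node` fields and the scoring rule (prefer the node with the largest average fraction of CPU and memory left free) are simplifying assumptions; the real scheduler combines many filter and score plugins.

```python
from dataclasses import dataclass


@dataclass
class Node:
    name: str
    cpu_capacity: int  # millicores
    mem_capacity: int  # MiB
    cpu_used: int = 0
    mem_used: int = 0


def fits(node, cpu, mem):
    """Filter phase: can the node hold the requested CPU and memory?"""
    return node.cpu_used + cpu <= node.cpu_capacity and node.mem_used + mem <= node.mem_capacity


def score(node, cpu, mem):
    """Score phase: average fraction of CPU and memory left free after placement (higher is better)."""
    cpu_free = (node.cpu_capacity - node.cpu_used - cpu) / node.cpu_capacity
    mem_free = (node.mem_capacity - node.mem_used - mem) / node.mem_capacity
    return (cpu_free + mem_free) / 2


def schedule(cpu, mem, nodes):
    """Return the chosen node's name, or None if no node fits (the workload stays pending)."""
    feasible = [n for n in nodes if fits(n, cpu, mem)]
    if not feasible:
        return None
    best = max(feasible, key=lambda n: score(n, cpu, mem))
    # Record the reservation so later scheduling decisions see the updated usage.
    best.cpu_used += cpu
    best.mem_used += mem
    return best.name
```

Keeping filtering and scoring separate means a node that cannot fit the request is never considered, no matter how attractive it would otherwise look.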

A Kubernetes worker node runs the following components: the kubelet, kube-proxy, and a container runtime.

Each worker node runs the kubelet, an agent that communicates with the master to report the health of the worker node and to carry out the actions the master requests on that node. The kubelet acts as the node agent responsible for managing the lifecycle of the containers running on its node.
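Because the kubelet reports in regularly, the control plane can treat a node that stops reporting as unhealthy. The Python sketch below shows that idea from the master's side. `NodeHealthTracker` and the grace period used in the example are hypothetical; in a real cluster, heartbeats and node conditions are handled by the kubelet and the node lifecycle controller, with their own configurable timeouts.

```python
import time


class NodeHealthTracker:
    """Toy model: judge node health from the time of each node's last kubelet heartbeat.

    The grace period is an illustrative parameter, not a Kubernetes default.
    """

    def __init__(self, grace_period_s, clock=time.monotonic):
        self.grace = grace_period_s
        self.clock = clock     # injectable so the behaviour can be tested without sleeping
        self.last_seen = {}    # node name -> time of last heartbeat

    def heartbeat(self, node):
        """Called whenever a node's agent reports in."""
        self.last_seen[node] = self.clock()

    def status(self, node):
        """A node is Ready only if it reported within the grace period."""
        seen = self.last_seen.get(node)
        if seen is None or self.clock() - seen > self.grace:
            return "NotReady"
        return "Ready"
```

Injecting the clock keeps the logic deterministic: a node flips to NotReady once its last heartbeat is older than the grace period and back to Ready as soon as it reports again.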

kube-proxy runs on each node and ensures that network traffic is routed properly to Services, both from inside and outside the cluster, based on the Service and endpoint information it watches through kube-apiserver. It maintains the network rules on each node (for example, iptables or IPVS rules) and performs connection forwarding and load balancing across the containers that back each Service.
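The load-balancing part boils down to mapping one Service to a changing list of backend endpoints and picking one per connection. The Python sketch below uses round-robin selection to stay deterministic; note that kube-proxy's iptables mode picks backends randomly, while IPVS mode supports round-robin among other algorithms. `ToyServiceProxy` and the example addresses are hypothetical.

```python
class ToyServiceProxy:
    """Toy model of Service load balancing: one Service name, many backend endpoints."""

    def __init__(self):
        self._endpoints = {}  # service name -> list of "ip:port" backends
        self._next = {}       # service name -> index of the next backend to use

    def set_endpoints(self, service, endpoints):
        """Replace the backend list, as happens when containers come and go."""
        self._endpoints[service] = list(endpoints)
        self._next[service] = 0

    def route(self, service):
        """Pick the backend for the next connection using round-robin."""
        endpoints = self._endpoints.get(service)
        if not endpoints:
            raise LookupError(f"no ready endpoints for service {service!r}")
        i = self._next[service]
        self._next[service] = (i + 1) % len(endpoints)
        return endpoints[i]
```

Connections rotate through the backends, and when the endpoint list changes, routing immediately uses only the current backends.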

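The container runtime is the software that actually executes and manages containers on the node. The kubelet talks to it through the CRI (Container Runtime Interface), so any CRI-compatible runtime, such as containerd or CRI-O, can be used.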
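The point of the CRI is that the kubelet depends only on an interface, not on a particular runtime. The Python sketch below mirrors a few CRI RuntimeService calls (RunPodSandbox, CreateContainer, StartContainer, StopContainer) as abstract methods and plugs in an in-memory fake. The real CRI is a gRPC API; the Python class names, method signatures, and `start_pod` helper here are hypothetical.

```python
from abc import ABC, abstractmethod


class ContainerRuntime(ABC):
    """Hypothetical Python mirror of a few CRI RuntimeService calls."""

    @abstractmethod
    def run_pod_sandbox(self, pod_name): ...

    @abstractmethod
    def create_container(self, sandbox_id, image): ...

    @abstractmethod
    def start_container(self, container_id): ...

    @abstractmethod
    def stop_container(self, container_id): ...


class FakeRuntime(ContainerRuntime):
    """In-memory stand-in for a CRI-compatible runtime such as containerd or CRI-O."""

    def __init__(self):
        self.containers = {}  # container id -> {"sandbox", "image", "state"}
        self._n = 0

    def _new_id(self, prefix):
        self._n += 1
        return f"{prefix}-{self._n}"

    def run_pod_sandbox(self, pod_name):
        return self._new_id(f"sandbox-{pod_name}")

    def create_container(self, sandbox_id, image):
        cid = self._new_id("ctr")
        self.containers[cid] = {"sandbox": sandbox_id, "image": image, "state": "CREATED"}
        return cid

    def start_container(self, container_id):
        self.containers[container_id]["state"] = "RUNNING"

    def stop_container(self, container_id):
        self.containers[container_id]["state"] = "EXITED"


def start_pod(runtime: ContainerRuntime, pod_name, images):
    """What a kubelet-like caller does: it uses only the interface, never the concrete class."""
    sandbox = runtime.run_pod_sandbox(pod_name)
    ids = []
    for image in images:
        cid = runtime.create_container(sandbox, image)
        runtime.start_container(cid)
        ids.append(cid)
    return ids
```

Swapping `FakeRuntime` for another implementation of `ContainerRuntime` requires no change to `start_pod`, which is the same decoupling the CRI gives the kubelet.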

You should now have a better understanding of Kubernetes and its architecture. In upcoming tutorials, we will install and set up Kubernetes clusters on different platforms. Stay tuned for more DevOps tutorials.

Read Also: An Advanced Overview of Docker

Originally published at https://thecodecloud.in on October 12, 2020.